The Trust Collapse: Why 84% Use AI But Only 33% Trust It

5 min read · Feb 19, 2026

#ai #trust #adoption #teams #verification

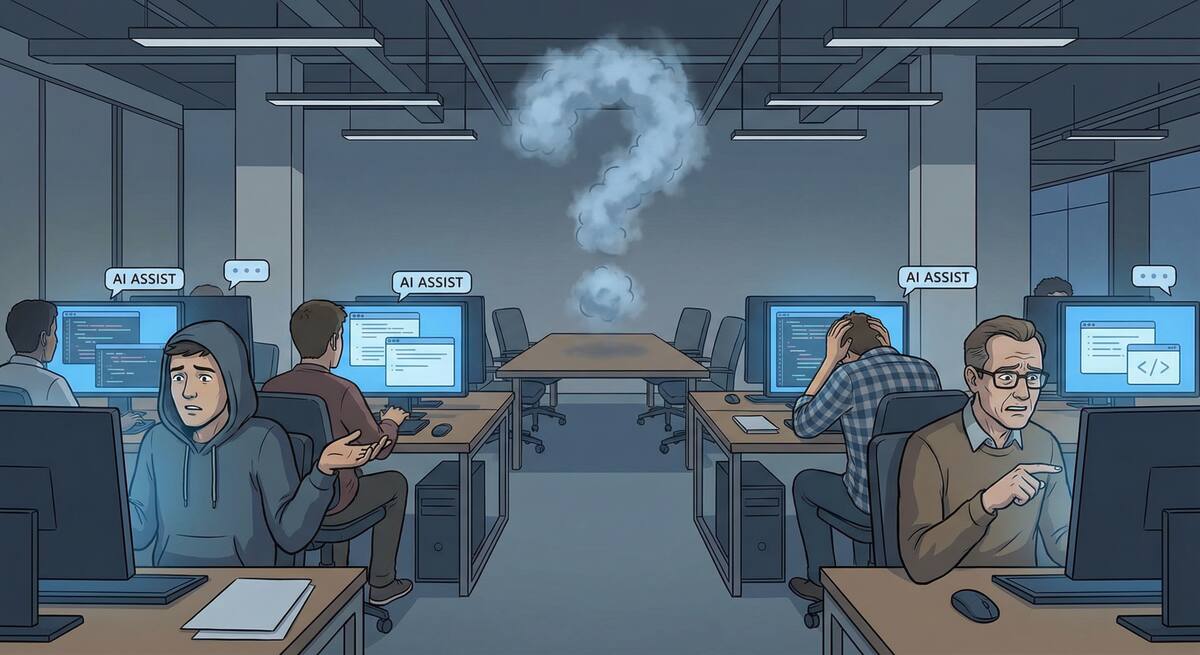

Usage of AI coding tools is at an all-time high: 84% of developers use them or plan to. Trust in AI output, meanwhile, has fallen. In recent surveys, only about a third (33%) of developers say they trust AI output, and only a tiny fraction say they "highly" trust it. Experienced developers are the most skeptical of all.

That gap—high adoption, low trust—explains a lot about why teams “don’t see benefits.” When you don’t trust the output, you verify everything. Verification eats the time AI saves, so net productivity is flat or negative. Or you use AI only for low-stakes work and conclude it’s “not for real code.” Either way, the team doesn’t experience AI as a performance win.
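The arithmetic behind "flat or negative" is simple enough to sketch. The minutes below are made up for illustration (they are not survey data), and `net_minutes_saved` is a hypothetical helper. The point is that the same AI saving can turn into a net loss or a net gain depending only on how much verification it triggers:

```python
def net_minutes_saved(minutes_saved_by_ai: float, minutes_spent_verifying: float) -> float:
    """Net effect of using AI on one task; positive means a real speedup."""
    return minutes_saved_by_ai - minutes_spent_verifying

# Hypothetical task: AI drafts it 30 minutes faster than writing it by hand.
# Low trust: the developer re-reads every line and re-derives the logic.
low_trust = net_minutes_saved(30, 35)   # -5: slower than doing it by hand
# Calibrated trust: a bounded, targeted review of the risky parts only.
calibrated = net_minutes_saved(30, 10)  # 20: most of the saving survives

print(low_trust, calibrated)  # -5 20
```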

If you want your team to actually see performance benefits from AI in their workflows, you have to address the trust problem. Here’s what’s going on and how to start fixing it.

Why Trust Dropped

Accuracy and reliability: People have been burned. Wrong code, wrong docs, plausible-sounding nonsense. Once that happens a few times, the default becomes “assume it’s wrong until I check.”

No way to calibrate: With a human colleague, you learn when to trust them (“Jane is usually right on APIs, double-check on security”). With AI, every response looks equally confident. There’s no built-in signal for “this is more likely wrong,” so people treat everything as suspect.

Visibility into reasoning: You can't reliably ask the model "why did you do it that way?" and get a faithful answer. So when something is wrong, you don't get a clear mental model of how to correct it; you just see that it's wrong again. That erodes trust over time.

Pressure and blame: If something goes wrong and “AI wrote it,” the human still gets the blame. So the rational move is to verify heavily or avoid AI for anything that matters—which keeps trust low and benefits invisible.

How Low Trust Blocks Benefits

For teams struggling to see AI performance benefits, the cycle looks like this:

- Team tries AI.

- They get a few wrong or insecure answers.

- Trust drops; they verify everything or restrict AI to trivial tasks.

- Net speed doesn’t improve (or drops), so they report “no benefit.”

- They use AI less for high-impact work, so they never build evidence that it can help when used well.

The tool may be perfectly capable, but if trust is broken, the team never reaches the point where benefits show up in its actual workflow.

Rebuilding Trust So Benefits Can Show Up

You can’t fix this with a memo. You fix it by creating conditions where the team can safely learn when to trust and when to verify.

Start With High-Trust, Low-Stakes Use Cases

Use AI first where mistakes are cheap and wins are obvious: internal docs, tests, refactors in well-covered code, boilerplate. Publish the wins: “We generated these runbooks in a day.” “We added these tests with AI and they caught X.” Let the team see that when the stakes are right, AI does help. Trust grows when good outcomes are visible and failures aren’t catastrophic.

Make Verification Explicit and Bounded

Don’t ask people to “trust more.” Give them a clear verification rule so that trust is earned in a controlled way. For example:

- Tier 1 (low risk): Docs, tests, internal tooling—review for sense, not line-by-line.

- Tier 2 (medium risk): Feature code, non-security APIs—targeted review and tests.

- Tier 3 (high risk): Auth, payments, security-sensitive paths—full review and extra checks.

When the team knows that a Tier 1 mistake won't take down production and that Tier 3 always gets real scrutiny, people can stop checking Tier 1 work line by line and extend trust where it's safe. That's when net speed gains start to appear.
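One way to make the tiers mechanical rather than a judgment call on every pull request is to classify a change by the paths it touches. This is a minimal sketch, assuming a repository where risk maps cleanly to directory prefixes; the prefixes, `classify`, and `tier_for_change` are hypothetical and would need to be adapted to a real codebase:

```python
from enum import IntEnum


class Tier(IntEnum):
    LOW = 1     # docs, tests, internal tooling
    MEDIUM = 2  # feature code, non-security APIs
    HIGH = 3    # auth, payments, security-sensitive paths


# Hypothetical path prefixes; each repository would define its own.
HIGH_RISK_PREFIXES = ("src/auth/", "src/payments/", "src/security/")
LOW_RISK_PREFIXES = ("docs/", "tests/", "tools/")

REVIEW_RULES = {
    Tier.LOW: "review for sense, not line-by-line",
    Tier.MEDIUM: "targeted review and tests",
    Tier.HIGH: "full review and extra checks",
}


def classify(path: str) -> Tier:
    """Assign a risk tier to one changed file path."""
    if path.startswith(HIGH_RISK_PREFIXES):
        return Tier.HIGH
    if path.startswith(LOW_RISK_PREFIXES):
        return Tier.LOW
    return Tier.MEDIUM  # default: ordinary feature code


def tier_for_change(paths: list[str]) -> Tier:
    """A change is as risky as the riskiest file it touches."""
    return max((classify(p) for p in paths), default=Tier.LOW)


change = ["docs/runbook.md", "src/payments/refund.py"]
tier = tier_for_change(change)
print(tier.name, "->", REVIEW_RULES[tier])  # HIGH -> full review and extra checks
```

Taking the maximum tier is the deliberate choice here: bundling a docs edit with a payments change should not let the payments code slip through with a Tier 1 skim.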

Share When AI Is Wrong (And How You Caught It)

Turn failures into process improvements. When someone finds a bad suggestion or a bug from AI code, document it in a blameless way: “Here’s what we did, how we caught it, and what we’ll do next time (e.g. add a test, add a review step).” That does two things: it shows that verification works, and it builds a shared sense of where AI tends to fail. Over time, people trust the process instead of having to trust the model blindly.
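The "shared sense of where AI tends to fail" gets sharper if the blameless write-ups share a few structured fields that can be tallied. A minimal sketch, assuming a hypothetical `AIIncident` record whose fields mirror the three questions above (what happened, how it was caught, what changes next time) plus a free-form failure category:

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class AIIncident:
    """One blameless write-up of an AI mistake (hypothetical schema)."""
    what_happened: str
    caught_by: str     # e.g. "code review", "unit test", "CI"
    failure_kind: str  # e.g. "wrong API usage", "insecure default"
    follow_up: str     # e.g. "add a test", "add a review step"


def where_ai_fails(incidents: list[AIIncident]) -> list[tuple[str, int]]:
    """Failure kinds, most common first: where to verify harder."""
    return Counter(i.failure_kind for i in incidents).most_common()


def how_we_catch_it(incidents: list[AIIncident]) -> list[tuple[str, int]]:
    """Which safeguards actually catch AI mistakes, most effective first."""
    return Counter(i.caught_by for i in incidents).most_common()


log = [
    AIIncident("Suggested a deprecated client method", "code review",
               "wrong API usage", "add a lint rule"),
    AIIncident("Built SQL by string concatenation", "code review",
               "insecure default", "add a review step"),
    AIIncident("Called a helper that does not exist", "unit test",
               "wrong API usage", "add a test"),
]
print(where_ai_fails(log))   # [('wrong API usage', 2), ('insecure default', 1)]
print(how_we_catch_it(log))  # [('code review', 2), ('unit test', 1)]
```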

Give People Permission to Opt Out or Restrict Use

If AI is mandatory and someone gets burned, they are unlikely to trust it again. If they can say "I'm not using AI for this" or "I'm only using it for X," they're more likely to try it where they're comfortable and expand from there. Optional, staged adoption lets trust build instead of forcing it.

Measure Trust and Experience, Not Just Usage

Add a couple of lightweight survey questions: “How much do you trust AI output for [specific use case]?” “How much did verification slow you down this week?” Track trends. If trust is low or verification cost is high, you know you need to work on use-case fit and guardrails before pushing for more adoption.
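Tracking the two questions takes very little machinery. A minimal sketch, assuming answers on a hypothetical 1 to 5 scale (trust: 1 = none, 5 = full; verification cost: 1 = no slowdown, 5 = large slowdown) and thresholds of 3.0 chosen purely for illustration; the responses below are invented:

```python
from statistics import mean


def weekly_averages(responses_by_week: list[list[int]]) -> list[float]:
    """Average the 1-5 answers for each week."""
    return [mean(week) for week in responses_by_week]


def trend(values: list[float]) -> float:
    """Latest week minus first week; positive means the score is rising."""
    return values[-1] - values[0]


def needs_guardrail_work(trust: list[float], verification_cost: list[float],
                         trust_floor: float = 3.0, cost_ceiling: float = 3.0) -> bool:
    """Flag when the latest trust is low or the latest verification cost is high."""
    return trust[-1] < trust_floor or verification_cost[-1] > cost_ceiling


# Invented responses from three people over three weeks.
trust = weekly_averages([[2, 3, 2], [3, 3, 2], [3, 4, 3]])
cost = weekly_averages([[4, 4, 5], [4, 3, 4], [3, 3, 4]])

print([round(x, 2) for x in trust])      # [2.33, 2.67, 3.33]
print(needs_guardrail_work(trust, cost))  # True: trust is recovering, verification is still costly
```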

Connecting Trust to “Seeing Benefits”

For a team that’s struggling to see any performance benefits from AI in their software engineering workflows, the sequence is:

- Acknowledge the trust problem – “We use it but we don’t trust it” is a valid state. Don’t dismiss it.

- Narrow the use cases – Focus on places where mistakes are safe and wins are visible. Let trust grow there.

- Make verification part of the workflow – So that when AI is wrong, you catch it without drama. That reinforces that the system is under control.

- Celebrate and measure outcomes – “We shipped this faster,” “We caught this in review.” Tie those to AI use so the team sees that trust, when calibrated, leads to benefit.

- Expand gradually – As trust and evidence build, extend AI to more tasks. Don’t jump to “use it for everything” before the team has seen clear wins.

When trust is low, more AI often means more verification and more fatigue—no visible benefit. When trust is deliberately rebuilt through safe use cases, clear rules, and visible wins, the same tools start to deliver the performance benefits that struggling teams are looking for.